September presented a revealing pattern in the field service industry. While industry events showcased ambitious infrastructure plans and transformative technologies, the substance of conversations - both from our leadership team and in hallways at Metro Connect Fall and Tech Expo 25 - centered on more fundamental questions: Why do implementations fail? How do you make AI work with custom data? What happens when workflows don't match operational reality?

This month brought clarity to challenges operators face daily but vendors rarely address honestly. Our leadership spent September explaining the technical and methodological realities behind successful deployments, while our teams at two major industry events heard operators describe exactly those challenges in their own operations.

Why Implementation Is Key

Forward Deployment: The Missing Piece Most Vendors Ignore

In a detailed conversation about AI readiness, CEO Prateek Chakravarty explained why most field service AI projects fail before they deliver value. The problem isn't the technology; it's treating enterprise software deployment as a product transaction rather than a collaborative transformation.

"Physical industries aren't built for standard agile," Prateek explained. "The DNA is not there. The way of operating is different." Zinier's response has been developing value release methodologies that adapt agile principles for industries managing physical infrastructure rather than forcing those organizations to operate like software companies.

Central to this approach are forward deployment engineers who function as "full-cycle custodians" throughout implementation. They run discovery workshops to map workflows, translate business requirements into technical architecture and, critically, stay engaged after go-live to make sure the expected ROI is realized. As Prateek described it: "Any phase that goes live, we spend time after UAT, after go-live with the customer, making sure we're shadowing the personas to understand usage and get feedback. What's working? What's not working?"

The methodology challenges conventional wisdom about AI readiness. When asked what organizations should prioritize to be AI-ready in 2025, Prateek's answer focused entirely on mindset: "Being open for change. That's number one. Continuing to be obsessed about solving the problem and not getting enamored with technology."

He emphasized that organizations fail when they treat AI deployment as checking boxes: "Don't do pilots just for the sake of doing pilots and checking the box. Be very methodical, be very prescriptive about why and what problem is being solved here."

The warning extends to how organizations approach software purchases: "Either you're checking the box to do a pilot to meet certain objectives, or you're just treating it as buying a standard product and expecting it to work straight out of the box like SaaS would. It's a mindset shift."

🔗 Read the full Q&A: How to Deploy AI in Field Service

Why No Single FSM Vendor Has Dominated

CPO Andrew Wolf addressed a question that reveals deeper truths about field service complexity: why hasn't a single vendor won the FSM market?

"Field service is very complex, and every field service organization is unique," Andrew explained. "You connect to different systems than your competitors, you organize your field teams differently. Your field workflows, your processes differ."

This organizational complexity creates an impossible choice for most operators: build custom solutions (expensive and time-consuming) or buy off-the-shelf products that force process adaptation. "When you put yourself in a position where you have to match your processes to the software, it's not an ideal situation," Andrew noted. "Service companies really need to be innovative, you need to be able to continue to evolve and iterate."

The traditional build-versus-buy framing misses the actual challenge. Organizations don't need perfectly standardized processes or completely bespoke builds; they need platforms flexible enough to match operational reality while providing enterprise-grade capabilities.

This connects directly to AI deployment challenges. As Andrew explained: "Most of the service providers out there, most of the field service solutions, aren't really capable of leveraging the full power of AI and agents, especially on a customized solution." The moment you customize standard FSM platforms, their AI capabilities typically break because they're designed for out-of-the-box implementations only.

🔗 Read the full Q&A: Why Field Service Can't Be One-Size-Fits-All

The Custom Data Challenge

How AI Actually Works in Production Environments

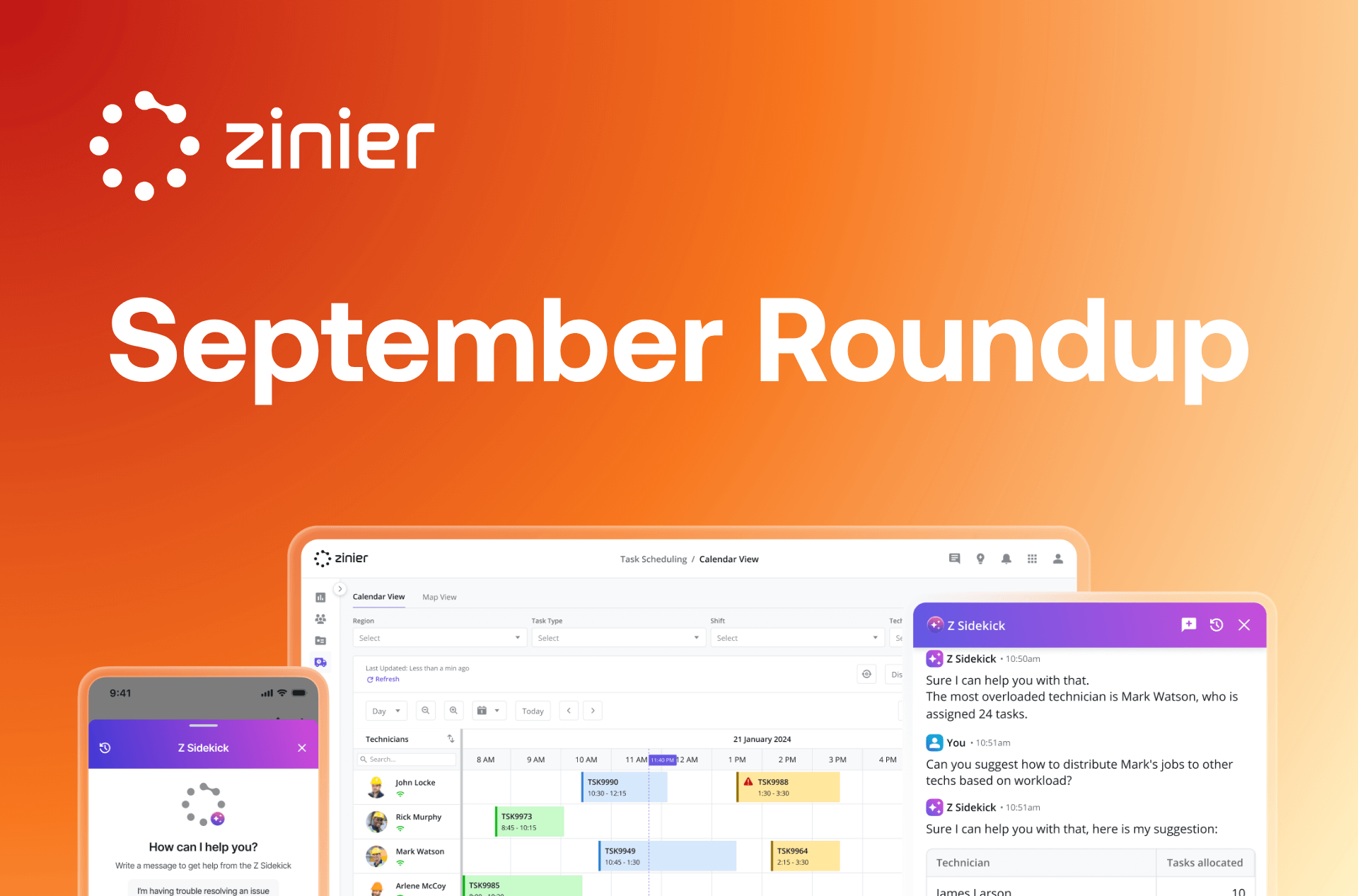

September brought three detailed conversations with Andrew Wolf about AI agents in field service, each addressing different aspects of the implementation reality most vendors gloss over in marketing materials.

The first tackled basic questions: How do AI agents actually work day-to-day? What accuracy can operations teams expect? What happens with offline field operations?

Andrew described agents across two dimensions: capability and domain. "You can think of them as specialized employees who each have a capability - a type of job or set of tools that agent is trained to perform."

Three primary capabilities emerged:

Data queries let agents answer questions and perform analytics on solution data. Example: "What technician had the highest utilization last month in the Northeast region?" The agent accesses relevant data and surfaces information quickly.

Documentation access uses RAG architecture to answer questions about uploaded operational manuals, technical documents, and procedures. This proves particularly valuable for newer employees who haven't built institutional knowledge.

Workflow triggers represent the next evolution - giving agents the ability to initiate actions and automate processes rather than just retrieving information.
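The data-query capability can be made concrete with the kind of computation an agent might ultimately run for the example question above. This is a minimal sketch under stated assumptions. The record fields (`technician`, `region`, `month`, `busy_hours`, `available_hours`) and the definition of utilization as busy hours divided by available hours are hypothetical and do not represent Zinier's data model. In practice the agent would translate the natural-language question into a query like this one.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShiftRecord:
    # Hypothetical record shape; a real FSM schema will differ.
    technician: str
    region: str
    month: str              # e.g. "2025-08"
    busy_hours: float       # hours spent on assigned work
    available_hours: float  # scheduled hours


def top_utilization(records: list, region: str, month: str) -> Optional[tuple]:
    """Return (technician, utilization) with the highest busy/available ratio."""
    totals = {}
    for r in records:
        if r.region != region or r.month != month:
            continue
        busy, avail = totals.get(r.technician, (0.0, 0.0))
        totals[r.technician] = (busy + r.busy_hours, avail + r.available_hours)
    # Utilization = total busy hours / total available hours per technician.
    scored = {t: b / a for t, (b, a) in totals.items() if a > 0}
    if not scored:
        return None
    best = max(scored, key=scored.get)
    return best, scored[best]


records = [
    ShiftRecord("Ana", "Northeast", "2025-08", 140, 160),
    ShiftRecord("Ben", "Northeast", "2025-08", 150, 160),
    ShiftRecord("Cruz", "Southwest", "2025-08", 158, 160),
]
print(top_utilization(records, "Northeast", "2025-08"))  # ('Ben', 0.9375)
```

Cruz has the highest ratio overall, but the region filter excludes that record. This is the kind of scoping detail an agent has to get right to answer accurately.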

On accuracy, Andrew was direct: "With a data type agent, we've been getting them up to well past 95% accuracy." But he emphasized the implementation reality: "It's not like just turn it on and go. Every situation is a little unique, and we can keep doing testing and refinement prior to it being deployed to get the accuracy levels up to what we need."

This refinement process - testing, adjusting, validating before production deployment - is precisely what most vendors don't mention when promising AI transformation.
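The test-and-refine loop described above can be pictured as an evaluation gate that runs before production. The sketch below rests on several assumptions. The labeled test set and the `stub_agent` function are hypothetical stand-ins for a real LLM-backed agent. The 0.95 threshold simply echoes the figure quoted above.

```python
from typing import Callable


def evaluate(ask_agent: Callable[[str], str], test_set: list) -> float:
    """Fraction of test questions the agent answers exactly as expected."""
    if not test_set:
        raise ValueError("test set is empty")
    correct = sum(
        1 for question, expected in test_set
        if ask_agent(question).strip().lower() == expected.strip().lower()
    )
    return correct / len(test_set)


def ready_to_deploy(accuracy: float, threshold: float = 0.95) -> bool:
    # Gate production release on a pre-agreed accuracy bar.
    return accuracy >= threshold


# Hypothetical stub standing in for a real agent during testing.
CANNED = {"open tasks in region NE?": "12", "overdue tasks?": "3"}


def stub_agent(question: str) -> str:
    return CANNED.get(question, "unknown")


tests = [
    ("open tasks in region NE?", "12"),
    ("overdue tasks?", "3"),
    ("techs on leave?", "2"),
]
acc = evaluate(stub_agent, tests)
print(round(acc, 3), ready_to_deploy(acc))  # 0.667 False
```

Exact-match scoring is deliberately simple. Real evaluations often need numeric tolerances, paraphrase handling, or human review, and each failed case becomes input to the next round of refinement.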

🔗 Read the full Q&A: How AI Agents Work in Field Service

Why Standard FSM AI Breaks With Custom Data

A second conversation explored the architectural challenge that prevents most FSM platforms from deploying AI on customized implementations.

"We want to be able to deploy AI agents in any solution for any use case using a no-code environment," Andrew explained. "Other FSM solutions simply aren't capable of deploying LLM-based agents on top of custom data and custom solutions. They may have some agentic capabilities working in really specific, narrow use cases, but once you start introducing custom data, then it doesn't really work."

The problem becomes clear when you move beyond standard fields. Traditional platforms might offer a chatbot that works with out-of-the-box task fields, but "that's where it would start getting into difficulty anytime there's custom fields." For organizations running customized implementations, which describes most enterprise deployments, AI capabilities become inaccessible.

Zinier's approach builds a repository of configurable agents that work with custom modules, custom fields, and specialized workflows. "Every client solution is different, every client has different pain points and business needs," Andrew noted. "As a result, the AI use cases to solve those pain points can be dramatically different."
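Consider what "working with custom fields" requires at the data layer. The agent's view of the data has to come from the client's configured schema rather than from a fixed list of standard fields. The sketch below is a simplified illustration of that idea and not Zinier's implementation. `STANDARD_TASK_FIELDS`, the custom field names, and the plain-text schema description are all hypothetical.

```python
# Hypothetical out-of-the-box task fields.
STANDARD_TASK_FIELDS = {"id": "str", "status": "str", "priority": "str"}


def field_catalog(custom_fields: dict) -> dict:
    """Merge standard and client-specific fields into one catalog for an agent."""
    catalog = dict(STANDARD_TASK_FIELDS)
    for name, ftype in custom_fields.items():
        if name in catalog and catalog[name] != ftype:
            raise ValueError(f"custom field {name!r} conflicts with a standard field")
        catalog[name] = ftype
    return catalog


def describe_for_prompt(catalog: dict) -> str:
    # Plain-text schema description an LLM agent could receive as context,
    # so it can reason about fields that exist only in this client's setup.
    return "\n".join(f"- {name}: {ftype}" for name, ftype in sorted(catalog.items()))


client_fields = {"splice_count": "int", "permit_ref": "str"}
print(describe_for_prompt(field_catalog(client_fields)))
```

A chatbot built against `STANDARD_TASK_FIELDS` alone would have no way to answer questions about `splice_count`. Building the catalog from configuration is what lets the same agent logic follow each client's customizations.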

The same principle extends to documentation. While other platforms might provide a chatbot for product documentation, organizations need agents grounded in their own custom operating procedures and manuals: the actual documents field teams reference when solving problems.
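Documentation agents of this kind typically use retrieval-augmented generation (RAG). At question time, the most relevant passages are retrieved from the organization's own manuals and handed to the language model as context, so the model does not need to be retrained on those manuals. The minimal sketch below illustrates only the retrieval step. It substitutes simple token-overlap scoring for the embedding similarity production systems usually use, and the manual excerpts are invented for illustration.

```python
import re
from collections import Counter


def tokens(text: str) -> list:
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, chunks: list, k: int = 2) -> list:
    """Rank manual chunks by shared-token count with the query; return top k."""
    q = Counter(tokens(query))
    scored = []
    for i, chunk in enumerate(chunks):
        c = Counter(tokens(chunk))
        overlap = sum(min(q[t], c[t]) for t in q)
        if overlap > 0:
            # -i keeps earlier chunks first when scores tie.
            scored.append((overlap, -i, chunk))
    scored.sort(reverse=True)
    return [chunk for _, _, chunk in scored[:k]]


def build_prompt(query: str, passages: list) -> str:
    # Retrieved passages become the grounding context for the model.
    context = "\n\n".join(passages)
    return f"Answer using only these excerpts from our procedures:\n\n{context}\n\nQuestion: {query}"


manual = [
    "Fiber splice closure: clean each fiber before splicing and log the splice count.",
    "Vehicle inspection: check tire pressure weekly.",
    "OTDR testing: test each fiber after splicing and attach the trace to the task.",
]
top = retrieve("how do I test fiber after splicing", manual, k=2)
print(build_prompt("how do I test fiber after splicing", top))
```

Because retrieval runs over whatever documents are loaded, swapping in a client's own procedures changes the answers without changing the code.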

Separating Real AI Opportunities From Rule-Based Automation

Perhaps the most practical September insight came from Andrew's description of AI and automation discovery workshops.

During a recent workshop, a client proposed three "AI use cases" that revealed an important pattern:

Use Case 1: Automatically assign high-priority tasks to nearest qualified technicians. Andrew's assessment: "That can be solved with auto-scheduling or with a specific recommendation from Zinier's Recommendation Center that is completely rule-based."

Use Case 2: Auto-populate timesheets based on task activity and location. "This is automation, but it's actually how the timesheet product was built. It has built-in rules that automatically tag certain activity based on the status of the task."

Use Case 3: Give technicians conversational access to operations manuals relevant to current tasks. This represents genuine AI territory where natural language processing and RAG architecture provide value.

The distinction matters for both technical and business reasons: "For things that are based on business rules, workflow automation and rule-based automation is better than using Gen AI firstly because Gen AI is still relatively expensive compared to other automation, it takes longer, and it's not 100% deterministic at this point."
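The first two use cases show why plain rules can suffice. Both reduce to deterministic logic that returns the same answer for the same inputs, with no model call involved. The sketch below is illustrative only. The `Technician` fields, the skill names, and the status-to-activity mapping are hypothetical and are not Zinier's auto-scheduling or timesheet rules. It uses the haversine great-circle distance as a stand-in for "nearest", where a production scheduler would more likely use drive time.

```python
import math
from dataclasses import dataclass


@dataclass
class Technician:
    # Hypothetical technician record.
    name: str
    skills: set
    lat: float
    lon: float
    available: bool


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres."""
    r = 6371.0  # mean Earth radius
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def assign_nearest(skill: str, lat: float, lon: float, techs: list):
    """Use case 1 as a rule: nearest available technician with the required skill."""
    qualified = [t for t in techs if t.available and skill in t.skills]
    if not qualified:
        return None
    # Name in the sort key makes ties resolve the same way every time.
    return min(qualified, key=lambda t: (haversine_km(lat, lon, t.lat, t.lon), t.name))


# Use case 2 as a rule: hypothetical task-status to timesheet-activity mapping.
STATUS_TO_ACTIVITY = {"en_route": "travel", "on_site": "work", "paused": "break"}


def tag_activity(status: str) -> str:
    return STATUS_TO_ACTIVITY.get(status, "unclassified")


crew = [
    Technician("Ana", {"fiber"}, 30.27, -97.74, True),
    Technician("Ben", {"fiber"}, 30.50, -97.80, True),
    Technician("Cruz", {"copper"}, 30.27, -97.74, True),
    Technician("Dee", {"fiber"}, 30.27, -97.74, False),
]
print(assign_nearest("fiber", 30.26, -97.74, crew).name, tag_activity("en_route"))
```

Neither function needs a language model. Each runs in microseconds, costs nothing per call, and is fully auditable. That is the trade-off Andrew describes when he reserves Gen AI for open-ended tasks like the third use case.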

Organizations benefit from systematic discovery that identifies where AI adds value versus where workflow automation or business rules deliver better results. As Andrew emphasized: "It's our job to interpret the intention behind customer comments and figure out the actual pain point they're trying to solve, then solve it in the best way possible whether it's with Gen AI, recommendations, or customization to the product using platform-based workflows."

🔗 Read the full Q&A: Building AI Agents That Work With Your Real Data

Industry Event Observations: Theory Meets Market Reality

Metro Connect Fall - Austin, Texas

Our team headed to Austin in mid-September for Metro Connect Fall, where the official program focused on hyperscale infrastructure growth and quantum computing advances. The positioning reflected industry ambitions: massive expansion plans, transformative technologies and future possibilities.

But Kevin Mukaj and the Zinier team found themselves having different conversations. After a full day of meetings, the question Kevin heard most often was: "How do field service teams keep up with what's being built?"

Specific challenges emerged consistently:

A telecom operator whose workflows no longer fit the realities of its fiber expansion. The company has grown through deployment, but its field service systems can't adapt to new operational patterns.

A company with hundreds of technicians fresh off an acquisition, trying to modernize scheduling and compliance across their now-merged workforce. The integration challenges extend beyond systems to fundamentally different operational approaches.

Regional fiber providers questioning whether systems they adopted only a few years ago can stretch to meet current installation and maintenance volumes. What seemed like forward-thinking investments now feel inadequate.

The pattern revealed itself: "Infrastructure ambitions are accelerating faster than the tools field teams rely on."

Kevin's message to operators struggling with these challenges emphasized Zinier's architectural difference: "Configurable AI agents, adaptive scheduling, and automation that aligns to your data and workflows, not the other way around."

Tech Expo 25 - Washington, D.C.

Two weeks later, George Dibb represented Zinier at Tech Expo 25, the Americas' largest broadband event, which brings together 10,000+ telecom leaders. George's positioning emphasized three areas where Zinier's platform delivers practical value:

Operational Excellence: Configurable scheduling and capacity management that operations teams can adjust themselves according to their KPIs - reducing travel time, increasing utilization, setting up automated recommendations for priority exceptions.

Technology Simplification: Event-driven, API-first platform architecture that deploys in weeks rather than months, with no-code/low-code configuration that eliminates legacy platform complexity.

Future-Ready Transformation: AI agents deployable across the platform to complete end-to-end tasks for dispatchers, field technicians, customers, and IT teams.

But again, the substantive conversations happened beyond the booth messaging. George's Day 2 assessment: "The conversations are remarkably consistent: operators struggling with fragmented systems, seeking consolidation pathways, and looking for practical ways to deploy AI that actually drives efficiency gains."

Cable and fiber leaders wanted to know how to solve current operational challenges. The questions reflected their immediate needs: system consolidation, workflow optimization, and practical AI deployment that delivers measurable efficiency.

The Connection Between Insights and Market Reality

September's pattern became increasingly clear as the month progressed. While our leadership explained implementation methodologies, custom data challenges, and why standard approaches fail with specialized workflows, our teams at industry events heard operators describe exactly those problems in their daily operations.

The alignment wasn't coincidental. It reflected fundamental truths about field service management that the market is beginning to acknowledge more openly:

Implementation methodology matters more than technology sophistication. FSM projects often fail because implementations don't account for operational reality, or because platforms force workflow adaptation rather than supporting how field teams actually work.

Custom data breaks standard AI. Most FSM vendors built platforms for out-of-the-box implementations, then retrofitted AI capabilities designed for standard data structures. When organizations customize, which enterprise deployments inevitably require, those AI capabilities become inaccessible or unreliable.

Infrastructure ambitions are accelerating faster than available tools. Whether it's fiber deployment, network maintenance, or post-acquisition integration, operators face pressure to do more with existing resources. When their field service platforms can't adapt to changing operational demands, that gap becomes a competitive disadvantage.

Platform flexibility beats feature checkboxes. Organizations don't need vendors to promise every possible capability out-of-the-box. They need platforms architected for customization, with AI agents that work with custom data structures and workflows that match operational reality rather than software constraints.

Looking Forward

September reinforced that successful field service transformation requires understanding why implementations succeed or fail, not just adopting the latest technology. The market is moving past vendor promises toward operational reality, asking harder questions about accuracy, implementation methodology, custom data support, and practical deployment timelines.

Field service organizations that recognize this shift will approach platform decisions differently. Rather than comparing feature lists or accepting vendor claims about AI transformation at face value, they'll investigate implementation approaches and ask about custom data support.

The hallway conversations at Metro Connect and Tech Expo revealed operators already asking these questions. They're looking for partners who understand field service complexity, acknowledge implementation challenges honestly, and build platforms for operational reality.

That's the work Zinier has been doing - and September's combination of leadership insights and industry event conversations validated that this approach addresses what the market actually needs.

Want to explore how Zinier's approach to implementation and custom data support could work for your organization? Schedule a demo to discuss your specific operational challenges.
